AiSight Guide

Product Designer / Capstone Project / Apr 2025 - Jun 2025

Overview

Assistive technology like magnifiers and screen readers has transformed internet accessibility for blind and visually impaired individuals. With high contrast ratios and text-to-speech readers, people with low or no vision have greater freedom to browse the internet. However, not all websites and apps follow accessibility guidelines, and even the ones that do still have elements that are not compatible with assistive technology. Screen readers are also limited on visually complex or image-heavy pages. There is a growing need for more advanced accessibility solutions that can help make the internet equitable for all users.

Product Concept

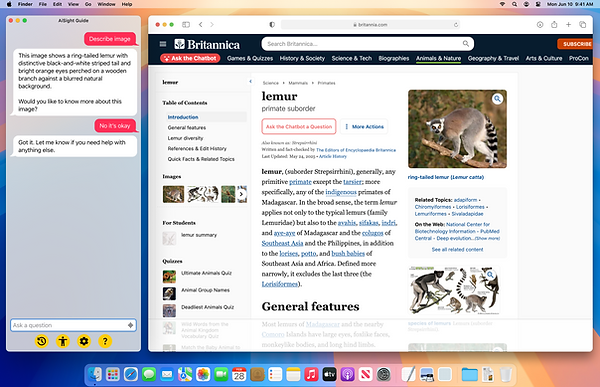

AiSight Guide is a desktop assistant that uses AI to recognize images and read text, acting as a sighted guide for users with low or no vision. By taking screenshots and reading the webpage’s source code, AiSight Guide will give users a context-rich description of a web page that helps them orient themselves on and navigate through a new website. They can also ask for a detailed image description when one isn’t provided. Users can dictate tasks or type questions, and the assistant responds through either text or text to voice.

By harnessing computer use AI, the assistant will also be able to take control of the cursor, click, and perform other tasks, like filling out a form, on the user’s behalf. Although this technology is still in early development, it will only improve and could represent a revolution in computer accessibility.

Problem Identification and Research

To gather more information and better inform the product's design, I talked with three members of the low vision/blind community. These users regularly use technology for their work or school and all use some form of digital assistive technology. I asked the following interview questions to better understand their current process and general sentiment regarding AI in accessibility.

Interview Questions

- How frequently do you use assistive technology in your day to day life? Are there any limitations?
- What is your experience with navigating new websites or digital environments? How long does it take to learn a new website or digital environment?
- What makes a website feel "well designed" from an accessibility perspective?
- When do you feel the need to reach out for sighted assistance?
- How frequently do you encounter websites that have poor labels, lack image descriptions, or do not meet your accessibility requirements?
- Do you currently use any AI tools to help with accessibility online or in real life? If yes, what method of interaction do those tools use and are they effective?

Key research findings

Throughout the conversations, I found some experiences and struggles shared by all the users. Although each interviewee had different needs depending on their vision level, navigating the internet remained a challenge no matter what assistive tools they used.

Identified User Pain Points

- Inaccurate image descriptions or lack of detail
- Complex website layouts that are not compatible with accessibility tools, e.g. not using hierarchical semantics
- Navigating through web pages sequentially can be very time consuming
- Inconsistency with following accessible guidelines even within a single website/app
- Learning curve to use screen readers, especially if they are new to the technology

Research Observations

- Visually impaired users have the most difficulty with links and buttons that don’t get picked up by screen readers or are not flagged as clickable to the software
- Detailed navigation and lots of menus are also difficult to navigate
- Tools with a high learning curve are not preferable, even if they are beneficial
- Although some users can utilize text, auditory response is more universal
- Users want to maintain a level of customization to descriptions
- Users prioritize accuracy when they try AI or other accessibility tools

Main Findings

- Accessibility tool compatibility is inconsistent - Links, buttons, and complex layouts often aren't properly recognized by screen readers, creating significant navigation barriers
- Sequential navigation creates major inefficiencies - Users face time-consuming processes when moving through detailed menus and complex website structures
- Accuracy is the top priority - Users prioritize precise, detailed descriptions over speed when using AI or accessibility tools

Understanding Our Users

Based on interviews with visually impaired individuals, I created these two personas to represent the primary users of AiSight Guide. While both Ali and Sarah face similar accessibility challenges online, they bring different levels of technical expertise, work contexts, and personal approaches to using assistive technology.

Ali represents the experienced user who has mastered existing tools and expects high standards from new technology. Sarah represents users who are still building their assistive technology skills while balancing professional demands. Together, these personas help illustrate the range of needs and preferences that AiSight Guide must address to truly serve the visually impaired community.

Competitive Analysis

| Company | Overview | Design Notes |
| --- | --- | --- |
| TypeAhead AI | A desktop application that works as a screen reader assistant. Uses AI to help navigate the UI, read text or provide context, and complete actions. | Simple chat modal that stays at the front of the screen and resembles the iMessage interface; accessible through keyboard shortcuts and works with Apple’s VoiceOver software; some autopilot capabilities, but advertised on only three other applications |
| AccessiBe | All in one platform to maintain web accessibility standards. Helps update your website to be compliant and allows you to insert a widget to toggle accessibility settings. | Accessibility toggles for disabilities, neurodivergence, and visual needs; uses an overlay interface and a small button to open, and this button is not always very visible; mainly for business use, since companies must purchase the service to provide its tools |
| PictureSmartAI | A new feature of the JAWS Windows screen reader that can supplement images that lack alt text. | Access through keyboard shortcut; shows a popup window with the generated image description; UI is fairly basic and not too polished |
| Claude AI | Anthropic’s chat interface. | Chat interface takes user prompts and performs the appropriate task; mostly text-based interaction that would work with a screen reader or text to voice |

Besides TypeAhead AI, there are not many software tools on the market that can act as a desktop assistant. Many tools depend on a company buying a software subscription in order to integrate accessibility features into its site. While that is a great step, small businesses may not have the foresight to invest in these features. With different companies offering different types of tools, the web is still inconsistent for disabled users.

Claude AI and ChatGPT are quick solutions but require some maneuvering from the user. Both can extrapolate information from web screenshots very well. The process is a bit clunky, though, and a smoother interaction would make these models more usable for disabled users.

Design Considerations

The main purpose of this application is to enhance existing accessibility features. Users can already adjust their OS system preferences for color, contrast, and screen resolution. The application shouldn’t fight or replace those settings; it should adhere to them. Along the same lines, most operating systems have some version of a screen reader. This system shouldn’t replace the screen reader, just fill in gaps when websites don’t contain the right information for it to read. Within the application itself, all the text and buttons should be screen reader compatible and comply with the OS’s native accessibility settings.

Balancing User Control and Automation

Keeping a balance of control and automation is important for users to feel that the system is helping without taking over everything. Users may have also developed familiarity with their current accessibility tools and only need help in areas where those tools fail. The app should let users choose how much they want to take advantage of its AI features.

Image Guide

Missing image descriptions are a big pain point for users, who encounter little to no description about half the time. If an image description is available and users want more detail, AiSight Guide can give a longer description that enhances the original. Users can adjust the level of detail by asking follow-up questions.

User Control: Give a baseline level of description, like 2-3 sentences, and see how users respond. They can ask for more detail if they need it. Starting out, it’s better to be less verbose and increase the detail than to overwhelm users with too much.

Automation: Turn on a setting that has the AI calibrate its descriptions based on how often the user asks for more detail or the type of images they want more details on. Users that consistently want more detail may tire of having to ask for follow up details every time. They can opt into a setting that will have the AI learn from their patterns.
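As a rough illustration of how this opt-in calibration might work, here is a minimal Python sketch. It is not the product's actual logic: the class name, the two detail levels, and the `min_samples` and `raise_threshold` values are all assumptions made for the example.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class DetailCalibrator:
    """Hypothetical helper: tracks follow-up requests per image type."""
    adaptive: bool = False         # opt-in setting, off by default
    min_samples: int = 5           # assumed: wait for some history first
    raise_threshold: float = 0.6   # assumed: follow-up rate that earns a longer default
    shown: dict = field(default_factory=lambda: defaultdict(int))
    follow_ups: dict = field(default_factory=lambda: defaultdict(int))

    def record(self, image_type: str, asked_for_more: bool) -> None:
        """Log one description and whether the user asked for more detail."""
        self.shown[image_type] += 1
        if asked_for_more:
            self.follow_ups[image_type] += 1

    def level_for(self, image_type: str) -> str:
        """Return 'brief' (the 2-3 sentence baseline) or 'detailed'."""
        seen = self.shown.get(image_type, 0)
        if not self.adaptive or seen < self.min_samples:
            return "brief"
        rate = self.follow_ups.get(image_type, 0) / seen
        return "detailed" if rate >= self.raise_threshold else "brief"


calibrator = DetailCalibrator(adaptive=True)
for _ in range(5):
    calibrator.record("product", asked_for_more=True)
print(calibrator.level_for("product"))     # detailed
print(calibrator.level_for("decorative"))  # brief
```

Keeping the counts per image type means a user who always wants more detail on product photos is not given longer descriptions of decorative images as a side effect.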

Navigation Guide

With Computer Use AI, users can direct the app to control their screen to perform certain tasks and help them navigate. The range of control AI has over the computer is directly related to the user’s commands. The system should narrate its actions and output a text trail from the start of a task to its completion.

*Note: Computer Use AI is still in early development, so this design assumes a fully released version that will come in the future.

User Control: Users ask AiSight Guide to help them find or click certain elements on a page. AI will not take any navigation action without the user prompting it for help. Users should also be able to stop control whenever they want.

Automation: Computer Use can also autopilot on users’ behalf. This can help users perform tasks or fill out forms. If AI comes to a point where it isn’t sure about something or how to proceed, it can ask the user to confirm or update their instructions.

Shortcut Commands

The system can save frequently used commands or requests so the user doesn’t have to type or dictate their question again.

User Control: Users can manually create their own shortcuts in the app settings. They can also update or remove existing shortcuts.

Automation: The system can recommend saving a shortcut based on past queries and the user’s behavior. The user is still free to save or ignore these recommendations.
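One simple way to picture the recommendation step is to count repeated requests and surface the ones that are not saved yet. The sketch below is only an assumption about how this could work; the function names and the `min_repeats` threshold of three are hypothetical.

```python
from collections import Counter


def normalize(query: str) -> str:
    """Lowercase and collapse whitespace so near-identical requests match."""
    return " ".join(query.lower().split())


def suggest_shortcuts(history, saved, min_repeats=3):
    """Return repeated requests that are not already saved as shortcuts."""
    counts = Counter(normalize(q) for q in history)
    saved_norm = {normalize(s) for s in saved}
    return [
        query
        for query, count in counts.most_common()
        if count >= min_repeats and query not in saved_norm
    ]


history = [
    "Describe this image",
    "describe this  image",
    "Read the headings",
    "Describe this image",
]
print(suggest_shortcuts(history, saved=[]))  # ['describe this image']
```

The function only returns suggestions. Saving one stays a user decision, which matches the control described above.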

Fostering User Trust

One unique aspect of the disabled community is that users are usually pretty willing to try new tools. Having more options for accessibility is always preferable, even if some tools are not for everyone. Users are also more accustomed to dealing with a learning curve, or exercising patience with digital tools that abled users might not need. With that said, it is important not to take people’s good will and patience for granted. Repeated mistakes or bad design will still disqualify a product from use.

Clear Onboarding

Given the unfamiliarity users will have, clear onboarding is essential. When users first start up the application, they should be welcomed with a quick start guide. The guide will help set the expectations for the app as well as teach users how to use it. As they move through the tutorial, they will get the chance to test and verify the results of the AI in a controlled environment, building their initial trust in the application.

Transparency

The app should provide clear explanations through the text interface and narrate its actions aloud. This way the user is always clear on what the system is working on. This is especially important for users without vision since they will not have visual indicators to show them what is happening.

User Control

Users can set their baseline preferences or let the system use adaptive features that tailor some of its outputs based on user behavior, for instance by learning what level of detail the user prefers. They should also always be able to follow up if they aren’t fully satisfied with the first answer. By customizing the app to their personal preferences, they can retain control and avoid overreliance.

Incorporating Feedback Loops

Since users may want different levels of detail in different scenarios, they may need to tell the AI to give them more or less description. In the beginning, the AI system can ask the user if they have any feedback about the description or would like to know more. This opens the opportunity for users to get more information and help refine the system to their preferences.

Security and Privacy

User data will be kept secure and the system should only save information relevant to learning the user’s preferences and navigation habits. Personal and browsing information should not be retained. The app will also disclose what type of data is being saved and used for the AI system to learn. If users do not want to use some of the adaptive learning features, they can opt out of this data collection.

Ethics and Societal Impact Review

AiSight Guide is designed to improve internet accessibility. However, without foresight into potential misuse and consideration of long-term impact, the app could become a band-aid for user issues without truly solving their problems. Through proactive examination of ethical and societal risks during the design phase, developers can implement safeguards and structural considerations that mitigate these concerns before deployment, ultimately creating more sustainable and equitable accessibility solutions.

Ethical Risks

Dependency and Skill Atrophy

Risk: Users become overreliant on AI to navigate instead of developing independent navigation skills. While AiSight Guide can be a powerful tool, if users need to use a device without the app, they should still maintain the skills to do so.

Mitigation: Instill user agency and empowerment. Rather than increasing reliance on AI, the app encourages users to independently navigate. The app should also have checks for users to confirm what they need instead of AI making decisions on their behalf.

Implementation: With training modes that promote digital independence, AiSight Guide can help users keep their skills in good shape. For example, if they use a screen reader, it can prompt them to use screen reader commands to complete certain actions. Navigation should turn over to the user to make decisions and clicks.

Privacy and Surveillance

Risk: AiSight Guide needs to oversee what content the user is browsing. If they log into their accounts, passwords could be exposed. They may also log into financial or medical accounts with sensitive data. This also raises concern over data privacy, tracking behavior, user consent, and other potential misuse.

Mitigation: In order to reduce data risks, data should be encrypted and retained for the shortest amount of time necessary. Processing and data storage should be done locally as much as possible. The app should also only retain data relevant to navigation support, like user preferences, not actual browsing data.

Implementation: To protect user data, the app should establish technical safeguards. Users can opt to use a safe mode that does not store any data from their session. They should also have clarity about what data gets collected and have the option to opt out. A clear, transparent policy that discloses who stores data, why, and how it is stored and transferred will also be provided.

Misrepresented Needs

Risk: A UI based on sighted experience, or on how a sighted person perceives the disabled experience, may not fulfill disabled users’ needs. For a sighted designer, it is impossible to fully capture and understand the needs and nuances of the disabled community.

Mitigation: Inclusive development is of the utmost importance to this product’s success. Designing for diverse disability experiences means involving disabled users throughout the process.

Implementation: Disabled users would be consulted from ideation to testing. Involving the disabled community early in the process would ensure their needs and frustrations are addressed.

AI Bias and Inaccuracy

Risk: Users with low or no sight are unable to visually confirm whether the descriptions or AI control is completely accurate. They may be led to believe something that is untrue or there may be hidden biases in the description.

Mitigation: Integrating user feedback and incorporating diverse perspectives will help reduce some of these gaps. More research can help form a set standard for how to describe images.

Implementation: Users can validate if the results use opinionated language or give a sense of value (ex. ugly or beautiful). Those with usable vision can correct AI if a description is not fully accurate as well. The system should also gather context from the webpage contents and pre-existing alt text to form a more accurate description. To adopt a standard method and language use for the system, testing and research should be done before the product is released to ensure the algorithm remains consistent and as factual as possible.
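As one small piece of that consistency testing, a description could be screened for value-laden words before it is read out. This is a minimal sketch, not a researched standard: only "ugly" and "beautiful" come from the example above, and the rest of the word list and the function name are hypothetical placeholders.

```python
import re

# Hypothetical starter list. A real list would come from research with users.
VALUE_WORDS = {"ugly", "beautiful", "pretty", "attractive", "unattractive"}


def flag_opinionated(description: str):
    """Return the value-laden words found in a generated description."""
    words = re.findall(r"[a-z']+", description.lower())
    return sorted({word for word in words if word in VALUE_WORDS})


print(flag_opinionated("A beautiful sunset over an ugly parking lot."))
# ['beautiful', 'ugly']
print(flag_opinionated("A red bicycle leaning on a brick wall."))
# []
```

A word list like this would only catch the most obvious cases. Subtler bias would still need the user feedback and research described above.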

Societal Risks

Accessibility Regression

Risk: With powerful AI tools, companies may feel less pressure to implement accessibility features and design in their websites. Accessibility awareness may also decrease if there is less accountability.

Mitigation: AiSight Guide can help reduce digital inequity, not increase it. Outside of helping disabled users, there should be an external push to improve standards at an industry level.

Implementation: The AI system may spot websites that lack accessibility or do not fulfill WCAG and push them to be compliant. Advocacy could also include providing guides and training to developers and designers for accessibility practices. By maintaining relationships and partnering with disability communities and advocacy groups, AiSight Guide can help lobby for better access.

Accessibility Gatekeeping

Risk: Advanced accessibility tools become locked behind paywalls, meaning unequal access.

Mitigation: Committing to an ethical business model will reduce issues of access. There should always be a version that is free to use and available to all.

Implementation: Tiered pricing with a free version will ensure a balance between profitability and access. Paying users can unlock more features, but the quality or base features should always be the same. Premium access should be limited to features that are “nice to have” but not “necessary.” In other words, users should not have to pay more in order to have a better user experience.

Loss of Community Support

Risk: Users choose AI assistance over community based accessibility support resulting in social isolation and weakening community connection.

Mitigation: Integrating community connections and human support resources directly into the AI experience will help maintain social bonds.

Implementation: AiSight Guide should include features that connect users to disability communities, accessibility advocates, and peer support networks. The AI can suggest relevant forums, local organizations, or mentorship programs when users encounter challenges. Additionally, the app could incorporate collaborative features where users can share navigation tips or request help from other community members, ensuring technology enhances rather than replaces human connection.

Ethics Review Conclusion

AiSight Guide would have a powerful impact in making the internet truly accessible for all. This tool addresses critical needs from overlooked communities and helps them navigate digital spaces faster and more easily. In designing this tool, it is imperative to follow ethical guidelines on user data, privacy, potential AI bias and misrepresentation. Accessibility tools also have justice and civil rights implications that may impact society as a whole. By leveraging partnerships and allying with disabled communities, AiSight Guide can also advocate for accessibility to the wider tech community. These measures will help mitigate risks to the user and help uplift the disabled community.

Designing the Interaction

I focused on three main ways people would interact with AiSight Guide: getting started with the app, using the image guide, and using the navigation guide. I wanted to make sure these experiences would actually work for users and feel natural when interacting with AI. Since the app needed to work well with assistive technology, I figured the best way to understand this was to try it myself. I used VoiceOver (macOS’s screen reader) for the first time and went through the entire twenty-step tutorial. It was eye-opening to experience how visually impaired users navigate their devices, and it really helped me understand what makes an interface easy to learn. This hands-on approach shaped how I designed the interactions to be both intuitive and accessible.

Onboarding Process

A controlled environment and step-by-step tutorial will set clear expectations for the application. The simplified interface in the tutorial will also slowly introduce users to important features. The practice area will be disabled until the user starts the tutorial. As they move through the steps, relevant areas will become enabled for them to continue. After the user finishes the tutorial, they can move on to the next step.

Image Guide and Adaptive Learning

Users can turn on additional AI learning that will help the system write better descriptions based on when the user asks for more detail. It will also take the type of image into account (for example, whether it is decorative, enhances information, or shows a product) based on context from the webpage.

With the learning feature on, the system will remember which images need a higher level of detail. Over time, it will get better at discerning when to give longer or shorter descriptions. The system will prompt the user for feedback and incorporate their preferences in future answers. Users will always be able to ask for additional details or follow up questions.

Wireframes

Mockups

Navigation Guide

Many webpages that have inaccessible features or complex layouts are hard to navigate for users with less vision, or with screen readers. There are slightly different challenges between the two that might affect how they would navigate.

From my conversations and my own exploration of these challenges, I learned that screen readers cannot help users reconcile what is visually on the page with what is available in the source code. For example, screen readers can give users a list of headings, form controls, and links on the page based on their HTML tags. However, if developers ignore conventional hierarchical structure and labels, the screen reader will not present those elements to the user. Sighted users can visually determine when something is a heading or button, and it doesn’t matter if it is mislabeled in the code. AiSight Guide will act as the visual medium for users, helping them track down mislabeled elements that a screen reader cannot detect.
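To make the idea of a mislabeled element concrete, here is a minimal sketch of one source-code check, written with Python's standard `html.parser`. It assumes a very narrow heuristic: an element with an inline `onclick` handler that is neither a native control nor given a `role`. The class and function names are hypothetical, and a real tool would need far more than this (handlers attached by scripts, for instance, are invisible to it, which is where the screenshot comes in).

```python
from html.parser import HTMLParser

# Elements a screen reader already treats as interactive.
NATIVE_INTERACTIVE = {"a", "button", "input", "select", "textarea"}


class ClickableAudit(HTMLParser):
    """Collects elements with a click handler but no semantic role."""

    def __init__(self):
        super().__init__()
        self.flagged = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag in NATIVE_INTERACTIVE:
            return
        if "onclick" in attr_map and "role" not in attr_map:
            self.flagged.append((tag, attr_map.get("class") or ""))


def find_unlabeled_clickables(html: str):
    """Return (tag, class) pairs for clickable elements lacking semantics."""
    audit = ClickableAudit()
    audit.feed(html)
    return audit.flagged


sample = (
    '<div class="btn" onclick="go()">Book</div>'
    '<button onclick="go()">OK</button>'
    '<span role="button" onclick="next()">Next</span>'
)
print(find_unlabeled_clickables(sample))  # [('div', 'btn')]
```

In the sample, only the `div` is flagged: it looks like a button to a sighted user but carries nothing a screen reader could announce as clickable.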

This is an intentionally inaccessible webpage made by https://dequeuniversity.com/demo for demo/educational purposes.

This webpage would be fairly simple for a sighted user to navigate, but users with vision issues would have more difficulty. I asked Claude AI to help me spot potential accessibility concerns by examining both the page’s visuals and its source code. Some of its findings included navigation and controls that are difficult for keyboard users, a custom datepicker on the form, small buttons, icons lacking descriptions, and complex dropdown menus (full list at the end). I also found the hero image’s overlaying text difficult to read as a fully sighted person, let alone someone that requires high contrast visuals. The company logos below the hero image are also low contrast and hard to distinguish when I squint my eyes.

To better understand the screen reading experience, I turned on VoiceOver. I found I wasn’t able to interact with the navigation items because the dropdown is only shown when my cursor hovers over the element. A keyboard-only user would have a hard time finding the dropdown menu. (Disclaimer: I have very little experience with VoiceOver and I still rely heavily on my vision while I use it. There may be shortcuts or faster ways to navigate that I am unaware of.)

An AI system can examine the dropdown contents when the user prompts it and then help them interact with the dropdown if needed. I tested this by asking Claude what was in the menu. It provided all the contents of the menu and the current selections (full list below). In this instance, I asked it to examine the source code as well, since the menu does not open unless the user hovers over the item. AI can also help reorganize a horizontal layout into a more screen-reader-friendly vertical format. This menu is in top-down order, but the Language and Currency sections sit side by side. This means a user would have to tab through all the Language selections and then jump over to the Currency section. AI simply describes all these elements in a vertical list.

Breakdown of Navigation Menu

Looking at the source code, the "English USD nav item" (the locale-and-currency dropdown) contains these items:

Main display shows:

- US flag icon (SVG)
- "English" (language)
- "$" symbol + "USD" (currency)

When clicked, the dropdown menu contains:

Choose a country section: A dropdown with 50+ countries including Argentina, Australia, Belgium, Brazil, Canada, France, Germany, etc. (with "United States" currently selected)

Language section:

- English ✓ (currently selected)
- Deutsch
- Italiano
- Español
- Français

Currency section:

- GBP (with £ symbol)
- EUR (with € symbol)
- USD (with $ symbol) ✓ (currently selected)

Bottom:

- "Apply" button to save changes

The dropdown allows users to change their country, language, and currency preferences for the booking experience.
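The top-down reading order in this breakdown can be sketched in a few lines of Python. The section data below is copied from the breakdown. The `linearize_menu` function and its tuple format are assumptions made for illustration, not how Claude or AiSight Guide actually produces the list.

```python
def linearize_menu(sections):
    """Flatten (title, options, selected) groups into one top-down list."""
    lines = []
    for title, options, selected in sections:
        lines.append(f"{title}:")
        for option in options:
            marker = " (selected)" if option == selected else ""
            lines.append(f"  {option}{marker}")
    return lines


# The side-by-side Language and Currency sections from the demo page.
menu = [
    ("Language", ["English", "Deutsch", "Italiano", "Español", "Français"], "English"),
    ("Currency", ["GBP", "EUR", "USD"], "USD"),
]

for line in linearize_menu(menu):
    print(line)
```

Each section is read out in full before the next one begins, so a user hears the current Language and Currency selections in one pass instead of tabbing across the two columns.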

Filling out Forms

Another area where users may have difficulty is with forms. There are a couple of ways that users may interact with AiSight Guide to help with filling out a form. Some users might not need as much assistance and may have enough usable vision to comfortably fill out a form themselves. They might instead just need assistance with locating certain elements or reading illegible text. AI can help them visually locate what they need so they can continue their task.

Other users may want more navigation help. I found that the datepicker for this website did not work very well with VoiceOver and I had to tab through all the rows and numbers to find the date I needed. Instead, AI can see what is visually available on the page and help the user make their selections more quickly.

Wireframes

Mockups

Looking to the future

Overall, AI’s capabilities in enhancing accessibility are very promising and could lead to a lot of advancement. Current tools and screen reading technology work well, but they become limited if websites are made imperfectly. Accessible design is the baseline priority, yet even the most accessible websites may not be easy to use without some sight. AiSight Guide helps fill a much-needed gap between screen reading and visual context, helping users move through online spaces much faster.

I also see future use cases where the navigation guide could assist people with motor issues, the elderly, or new users learning how to use the computer. These ventures would take additional research into their needs and pain points, but the impact AI could have for these groups is significant. I personally hope to see further development and more companies commit to making our devices accessible for all.